Research

Our research group conducts methodological and applied research in Artificial Intelligence, Machine Learning and data analysis for intelligent systems. The code for our projects is available at https://github.com/Isla-lab

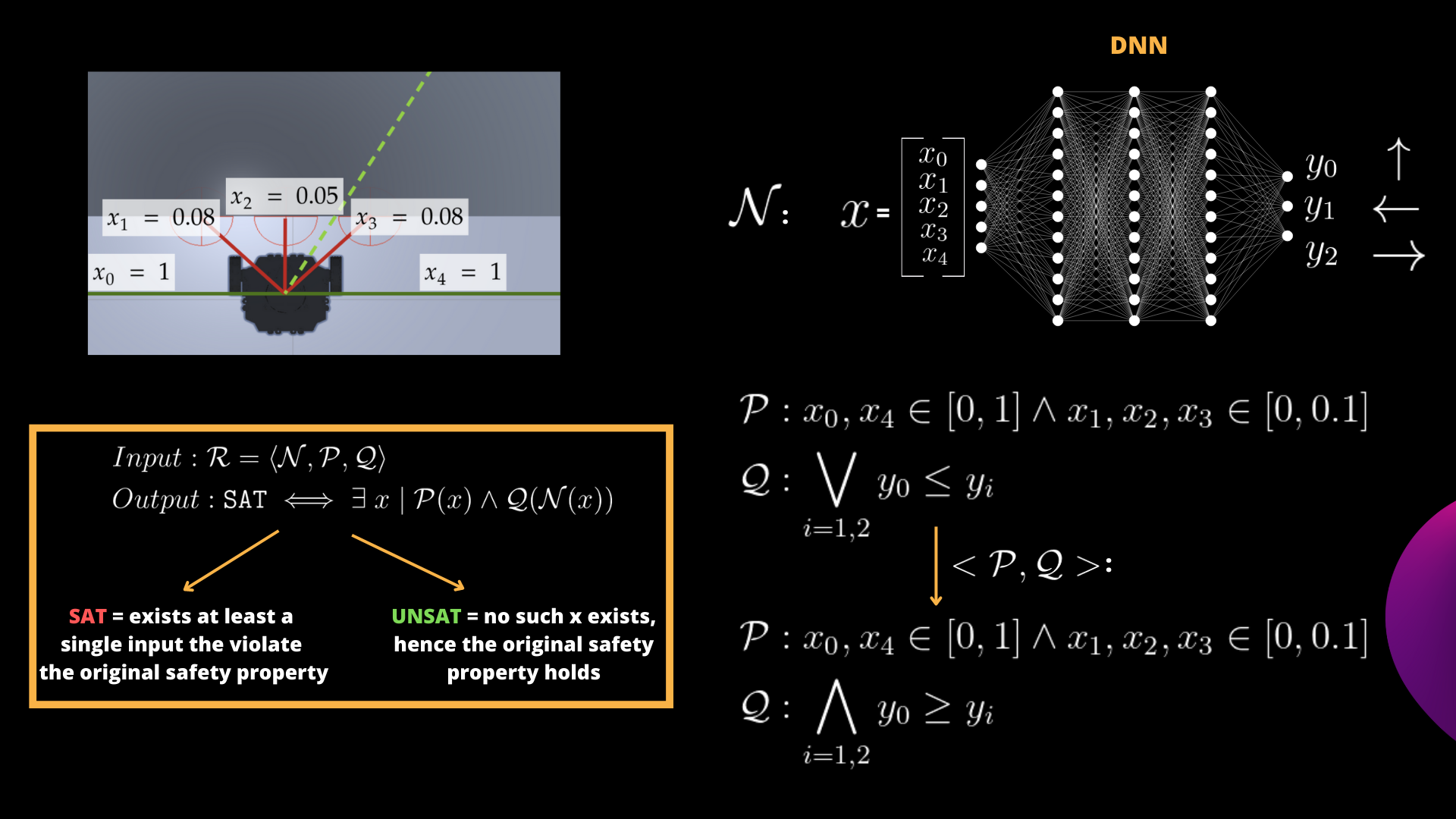

Artificial Intelligence algorithms (Safe Deep Reinforcement Learning) for Autonomous Vehicles

In recent years, Deep Reinforcement Learning (DRL) has emerged as a powerful technique for solving complex problems in a variety of applications, matching and often outperforming classical algorithms and humans. Our research focuses mainly on novel reinforcement learning (RL) methodologies that address the problem of safety, i.e., the application of RL solutions to problems and domains involving interaction with humans, hazardous situations and expensive hardware.

In particular, we propose to exploit DRL to generate real-time controllers based on Deep Neural Networks (DNNs) for a water-monitoring drone and for mobile robots.

Click on the image to watch the video

Possible thesis topics:

- Design, compare, and evaluate state-of-the-art algorithms in the environments provided by Unity.

- Work on safety-critical domains, designing specific environments and algorithms.

- Compare machine learning techniques with other artificial intelligence algorithms, to evaluate which one performs better on a specific task.

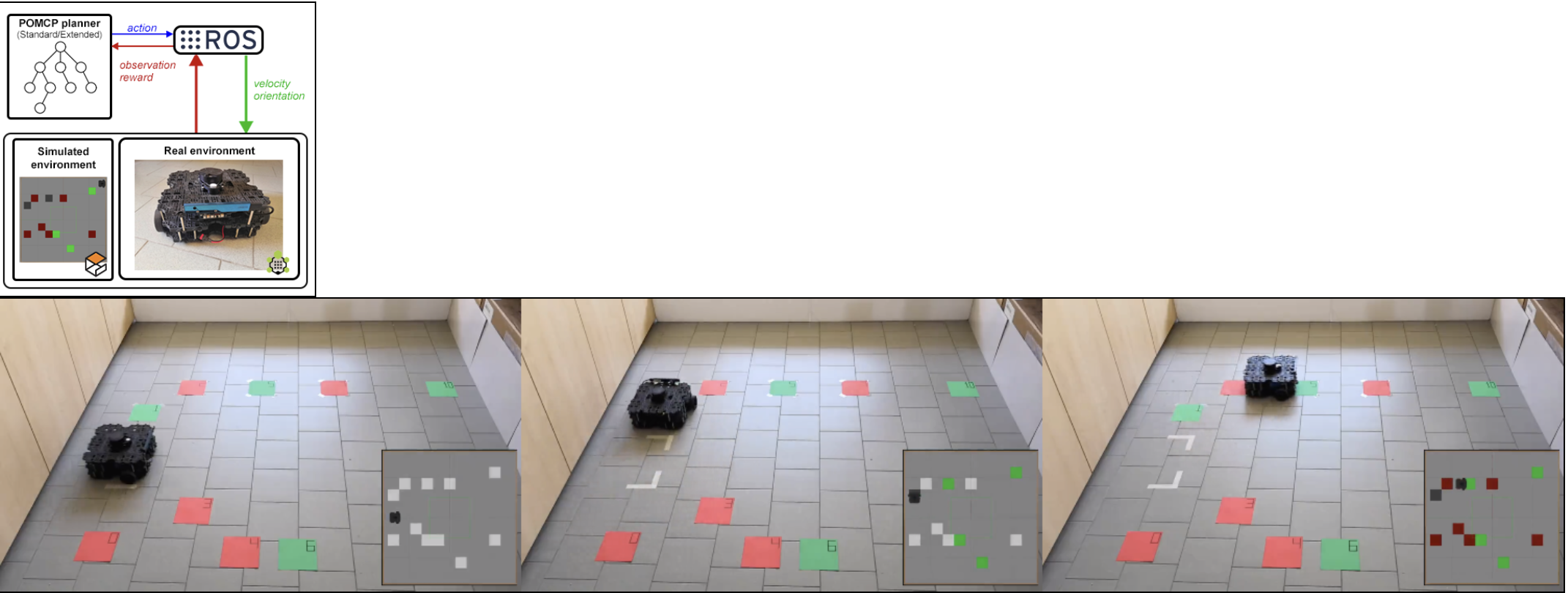

Planning under uncertainty and Explainable Planning

Possible topics in this area:

i) Planning under uncertainty: finding the best possible action given the data received from sensors. An example is deciding the speed a drone should maintain to ensure that it reaches the end of a given path with enough battery. Possible techniques include Markov Decision Processes (MDPs), Partially Observable MDPs (POMDPs), and Monte Carlo methods. Another key aspect is safety: a possible thesis could address Safe Policy Improvement applied to Monte Carlo methods.

ii) Explainable planning: devising methods that explain the decisions of an artificial intelligent system to a human user, which is a key requirement for modern AI. Applying this concept to robotic systems is extremely challenging because one must handle the inherent complexity and uncertainty typical of these systems. Possible techniques include logic-based methods for planning and decision making (e.g., Satisfiability Modulo Theories, Answer Set Programming, etc.).
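As a rough illustration of the drone-speed example, the decision can be written as a small MDP and solved exactly by backward induction. The sketch below is only a toy model: the path length, the two speed settings, their battery-consumption probabilities and the reward values are all made-up assumptions, not figures from any of our projects.

```python
from functools import lru_cache

# All numbers below are hypothetical and chosen only for illustration.
PATH_LENGTH = 6            # number of path segments to traverse
STEP_COST = -1.0           # each decision step costs one unit of time
FAILURE_PENALTY = -100.0   # battery exhausted before the end of the path

# action name -> (segments advanced, [(probability, battery units used), ...])
ACTIONS = {
    "slow": (1, [(0.9, 1), (0.1, 2)]),
    "fast": (2, [(0.6, 3), (0.4, 4)]),
}


@lru_cache(maxsize=None)
def value(position, battery):
    """Return (optimal expected return, best action) for one state."""
    if battery < 0:
        # The last move needed more energy than was available.
        return FAILURE_PENALTY, None
    if position >= PATH_LENGTH:
        # End of the path reached with a non-negative battery level.
        return 0.0, None
    if battery == 0:
        # Stranded before the end of the path.
        return FAILURE_PENALTY, None

    best_value, best_action = float("-inf"), None
    for name, (advance, outcomes) in ACTIONS.items():
        # Bellman backup: immediate cost plus expected value of the successors.
        q = STEP_COST
        for prob, used in outcomes:
            q += prob * value(position + advance, battery - used)[0]
        if q > best_value:
            best_value, best_action = q, name
    return best_value, best_action


if __name__ == "__main__":
    for battery in (6, 10, 20):
        v, a = value(0, battery)
        print(f"battery={battery:2d}  best first action={a}  expected return={v:.2f}")
```

In this toy model the resulting policy flies fast when the battery is plentiful and falls back to the slower, more energy-efficient setting when the battery is tight. A partially observable variant (POMDP) would replace the exact battery level with a belief over it, which is where Monte Carlo methods become useful.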

Formal Verification of Deep Neural Networks

DNNs have shown impressive performance in various tasks. However, the vulnerability of these models to adversarial inputs is a well-documented phenomenon observed across various applications. Formal Verification (FV) of DNNs uses mathematical methods to rigorously prove that a neural network meets certain safety and reliability requirements expressed as input-output relationships. Our research group is very active in this area. We developed ProVe (Corsi et al. 2021), an interval propagation-based method, and CountingProVe (Marzari, Corsi et al. 2023), a first randomized approximation algorithm for the #DNN-Verification problem.
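To give a feel for what interval propagation means, the sketch below pushes an input box through a tiny ReLU network and checks an input-output property on the resulting bounds. It is a generic textbook-style illustration, not the ProVe implementation; the network weights, the input region and the property threshold are invented for the example.

```python
def affine_bounds(lower, upper, weights, bias):
    """Bound y = W x + b over the box lower <= x <= upper."""
    out_lower, out_upper = [], []
    for row, b in zip(weights, bias):
        lo = hi = b
        for w, l, u in zip(row, lower, upper):
            if w >= 0:
                # A non-negative weight keeps the orientation of the interval.
                lo += w * l
                hi += w * u
            else:
                # A negative weight swaps the two ends of the interval.
                lo += w * u
                hi += w * l
        out_lower.append(lo)
        out_upper.append(hi)
    return out_lower, out_upper


def relu_bounds(lower, upper):
    """ReLU is monotone, so it can be applied to each bound separately."""
    return [max(0.0, l) for l in lower], [max(0.0, u) for u in upper]


def network_bounds(lower, upper, layers):
    """Propagate a box through affine layers with ReLU between them."""
    for index, (weights, bias) in enumerate(layers):
        lower, upper = affine_bounds(lower, upper, weights, bias)
        if index < len(layers) - 1:  # no activation on the output layer
            lower, upper = relu_bounds(lower, upper)
    return lower, upper


# Hypothetical 2-2-1 network: (weight matrix, bias vector) per layer.
LAYERS = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.25]),
    ([[1.0, 1.0]], [0.0]),
]

# Hypothetical property: for every input in [0, 1] x [0, 1], the output is <= 2.
out_lower, out_upper = network_bounds([0.0, 0.0], [1.0, 1.0], LAYERS)
property_holds = out_upper[0] <= 2.0

if __name__ == "__main__":
    print("output bounds:", out_lower, out_upper)
    print("property proved:", property_holds)
```

The computed bounds are sound but may be looser than the true output range. If the upper bound exceeded the threshold, the property would be undecided rather than violated, which is why practical verifiers typically refine the analysis, for example by splitting the input region.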

Multi-Robot Coordination, Anomaly Detection and Recovery

Implement and evaluate coordination approaches for multi-robot systems. Design coordination approaches for robotic agents involved in indoor logistics operations (e.g., pickup and delivery). Test the solutions in widely used simulation environments (ROS + Stage) and possibly on real platforms (TurtleBot, RB-KAIROS).

Click on the image to watch the video

Main applications

Water Monitoring with autonomous robotic boats

Build and test various high-level control and coordination techniques for autonomous robotic boats that monitor water conditions in lakes and rivers. This is the main research focus of the INTCATCH 2020 project.

Click on the image to watch the video

Possible topics: i) intelligent exploration: implementation and evaluation of approaches for intelligent water sampling; ii) HRI: study of Human-Robot Interaction approaches for controlling a team of autonomous boats.

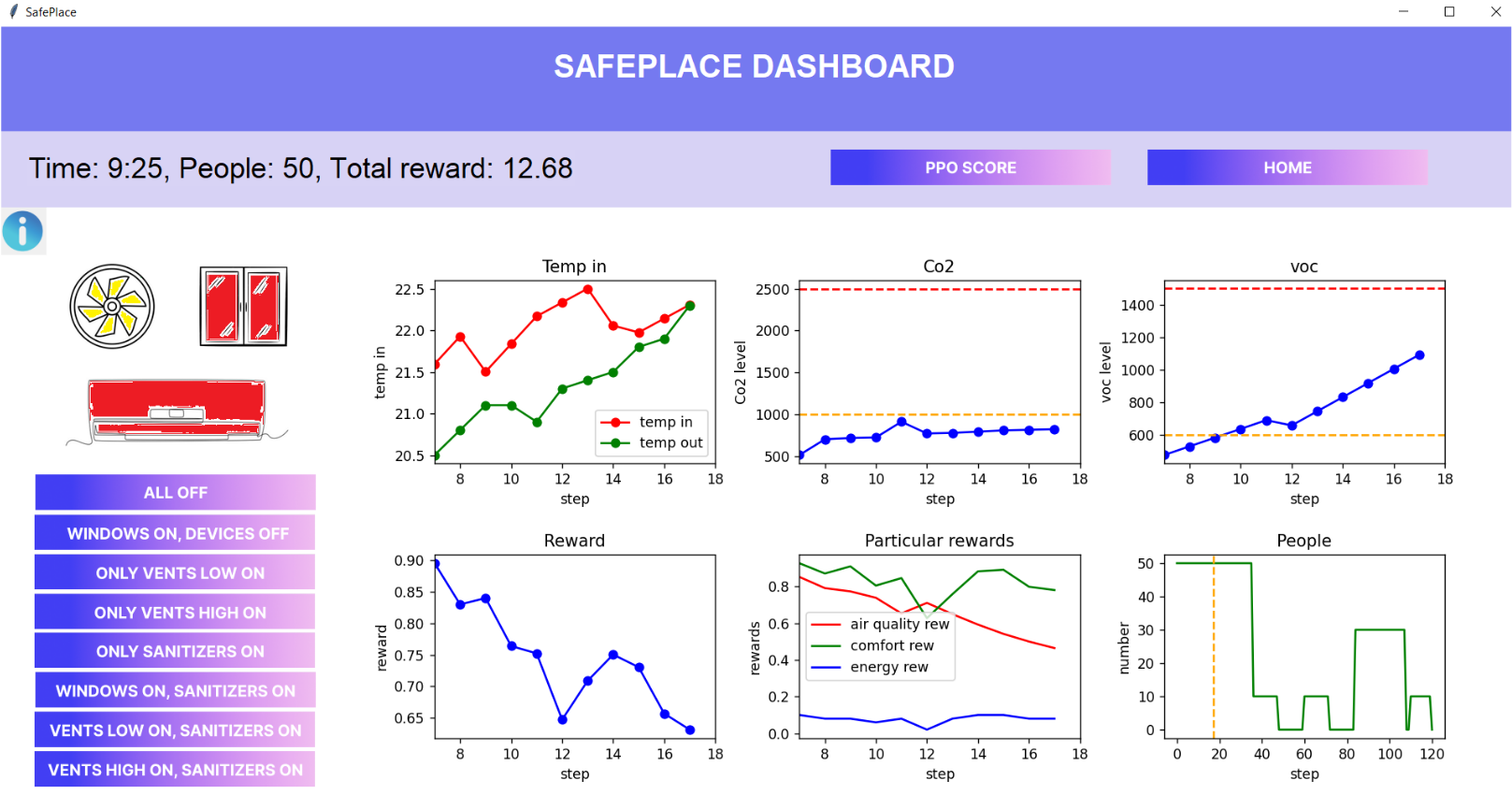

Adaptable Energy Management in Smart Buildings and Smart Grids

Buildings account for approximately 40% of final energy consumption and over one third of CO₂ emissions in the EU, while at the same time representing a largely underutilised source of flexibility for the energy system. Future decarbonisation objectives cannot be achieved by focusing solely on generation-side solutions; they require buildings to become active, adaptive and grid-interactive elements of the energy system. This research focuses on developing methodologies based primarily on reinforcement learning, autonomous planning under uncertainty, and time-series forecasting to reduce the energy consumption, emissions and costs of smart buildings and smart grids. A strong focus is currently on the optimal use of renewable energy.